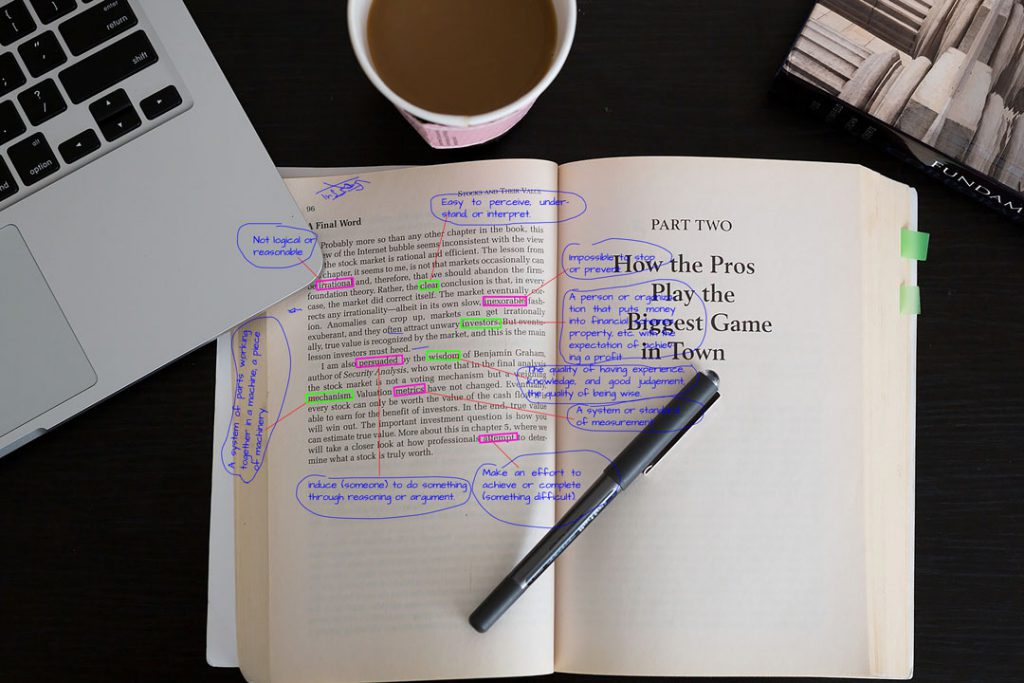

Annotation is familiar to us back from the schooling days. While reading something we used to mark doubtful areas for later references. Even the way of annotation differs according to the people, its purpose remains the same. So, in computer programming, annotation refers to documentation and the addition of comments to a logic code to make it more meaningful. In other words, image annotation is the process of tagging objects in an image with different labels to give it as data to the programs using neural network algorithms in order to enable the programs to ‘see’ and understand things like humans do. Hoping that in the future they’ll assist and work with us in solving problems and exploring the unexplored.

Will Annotations Improve Intelligence?

Humans can learn, understand, reason, form concepts, apply logic, and make decisions. It is with our intelligence we have these cognitive abilities. Through artificial intelligence, we look to emulate this type of intelligent behavior in machines. So, we want the machines to work for us, assist us, and even work with us. This could be the cure to solve many of the complex human development issues.

For machines to behave like a human, they need to be trained by data sets called training data sets. Annotation is the way of labeling these data. Text annotation, audio annotation, video annotation, image annotation are some of the types of annotations. Using more training data can increase the accuracy of the algorithm. For example, let us consider the case of a car geek. Such an expert can easily spot a car and tell which one it is in no time. And a man less into cars will do this only at a slower pace. The thing that separates a car geek from a normal man is the knowledge about cars. A machine trained with more training data will be similar to such a geek in the car spotting game. And a program with less training data will not be able to reach that performance level.

Teaching machines to ‘see’ as we do

Cameras take photos by converting light into a two-dimensional array of numbers called pixels. However, taking pictures is not the same as seeing. Seeing really means understanding. We can recollect stories of people, places, and things the moment we lay our gaze on them.

For a machine to see, name objects, identify people, infer geometry of things, or understand situations like we do computer vision is required. Similar to how a human child learns to do it through his/her real-life experiences and examples. Giving the same kind of training we can also make this happen in machines also.

But a single object could look different when perspective changes. So multiple data should be given to the system to identify a single object, this is where image annotation comes to play.

Why Is it Done?

Computer vision is a field of artificial intelligence, and image annotation and one of the important tasks in computer vision. With computer vision, we look to emulate vision like a human’s in machines. To look at a scene and understand what it is. We learned this from our experiences, the machines are also taught in the same way. To do this we need lots of training data.

We create these training data with image annotations. One of the basic tasks of every computer vision task is image annotation. It plays a huge role in making facial recognition, autonomous vehicles, and many other computer vision technologies possible.

Image Annotation

Artificial Intelligence and Computer Vision are highly advanced fields. But to build training data, it doesn’t ask for the same skills to make a self-driving car. However, to build these training data the annotators should properly understand the project specifications and guidelines. Every company may have different requirements.

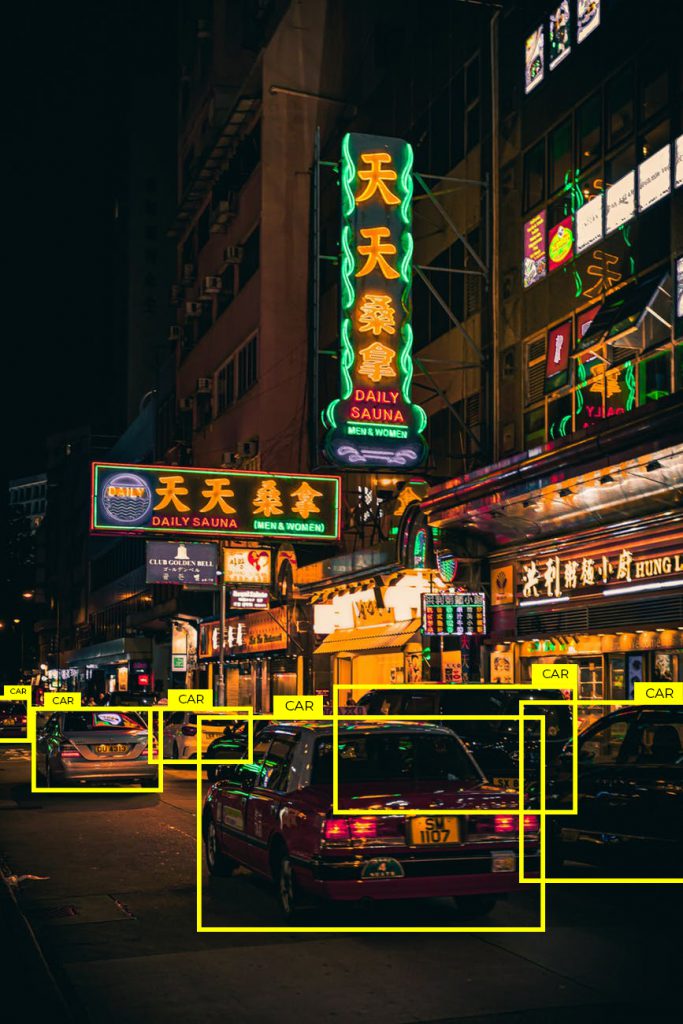

For instance, consider the given image. This contains pieces of information like what are the objects in the image and where exactly they are in the image. The annotation should provide a boundary that follows the object outline as accurately as possible. Here it is labeled cars but the labels and category may vary according to the project variations and demands. Like, Some projects may even ask the annotator to categorize the labels as cars, vans, trucks, and so on.

When the object has sharp edges it is easy to annotate. As more round or curved, the edges become we’ll have to use more points to outline the object accurately. Then we’ll have to use different techniques to get the annotations done. There are many techniques used in addition to the most commonly used bounding boxes. The project demands sometimes include the change of techniques of image annotation also.

Different Techniques in Image Annotations

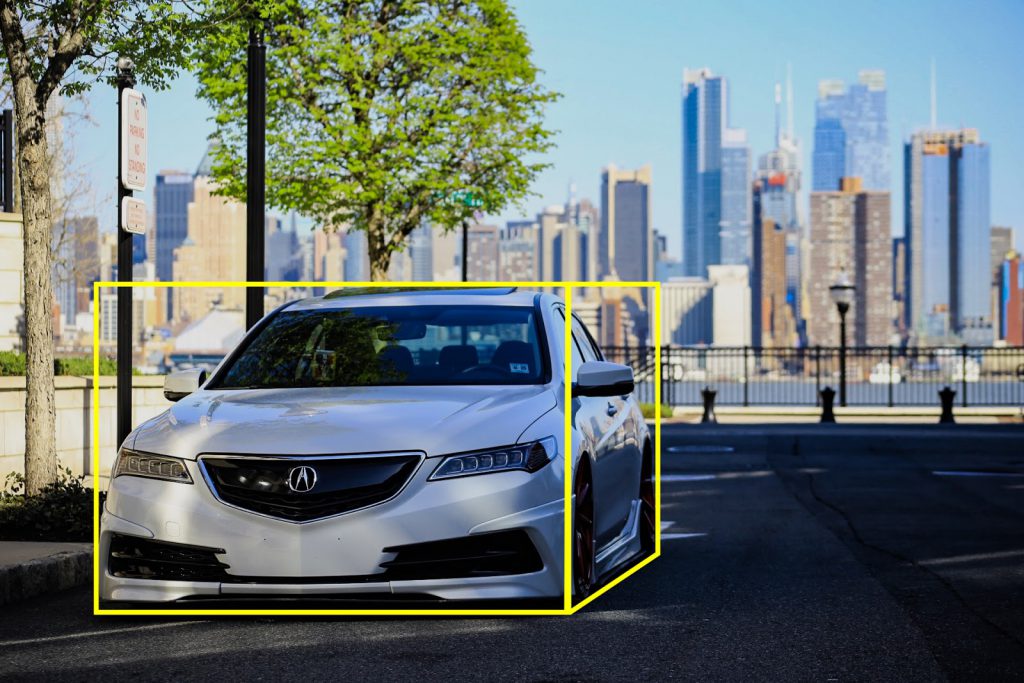

Bounding Boxes

One of the simplest techniques in image annotation. Not desired when building a high precision CV model.

Semantic Segmentation

It is one of the most accurate techniques of image annotation. Here the objects are annotated at their pixel levels. Creating training data with semantic techniques is a more time-consuming process than with bounding boxes. But one can build a much greater precise machine with these training data.

Keypoint

This technique is found as a favorite in medical and sports projects.

Cuboid/3D Annotations

This technique is used in both 2D images and 3D point cloud data. It is a little more complicated method than bounding boxes to do annotations.

Polyline

This is one of the common methods of annotations when producing data for lane detection of autonomous vehicles.

Whatever be the techniques used in annotation the reason why it is done is the same. It reduces the search area of CV models for a particular object or thing and contains information about where it exactly resides in the image.

For more on different techniques in image annotation check here

How Is it Done?

Annotators label images according to the directions given. A platform is needed to annotate all these images. There are open-source tools to do these annotations. But they haven’t always succeeded in meeting everyone’s project constraints. And the companies providing image annotation services have developed tools of their own to meet project needs. In order to fulfill the project demands companies like Infolks even customize their tools.

Steps in Image Annotation

1)Analyze project constraints

At first, before starting any annotation work, it is essential that the annotators are given an idea about the project’s demands and restrictions.

2) Select appropriate tool to do the image annotation

There are many tools available to do image annotations. However, not every tool can get the work done as the projects need it to be done. Most important step in image annotation is selecting the right tool for the work.

3) Annotate the objects in the images using appropriate techniques

The objects in images are annotated as per the project instructions and sometimes the project demands the annotator to a particular technique.

The images produced this way serve as data to train the computer vision programs and hence called training data. These training data play a major role in how your programs behave. So selecting experienced annotators or crowdsourcing companies is a crucial task too.

Why should humans do it?

Annotating images according to the machine’s task is a critical thing to mind. Machines learn to see with the trials provided to them processed through image annotations. However, annotation quality can never be compromised, maintaining which can be a hard task in automatic image annotation. Humans find it easy to annotate the images as per the context provided. Artificial intelligence has been developing and for it to carry out the simplest reasoning is still an arduous task. It has more distance to cover to go humane.

AI companies depend on crowdsourcing companies like INFOLKS for getting a large number of data annotated within the demanded deadline. Human annotated images assure AI companies that these data have been encompassed with human reasoning. And this will help in humanizing the machines.