Recent years have seen significant advances in Artificial Intelligence (AI) and Machine Learning (ML) technologies. Many people now interact with AI-enabled systems on a daily basis. AI has found applications in a range of industries, including healthcare, education, construction, manufacturing, law enforcement, and banking. It does not replace humans; rather, it augments our abilities and makes us better at what we do. The future of artificial intelligence lies in enabling people to collaborate with machines to solve complex problems. Like any efficient collaboration, this requires communication, understanding, transparency, and trust. ML/AI models are often thought of as black boxes that are opaque, unintuitive, and difficult for people to understand and interpret. Explainable AI (XAI), also called interpretable AI, might be the solution, and it is one of the most promising emerging directions in machine learning and artificial intelligence.

AI And Black Box

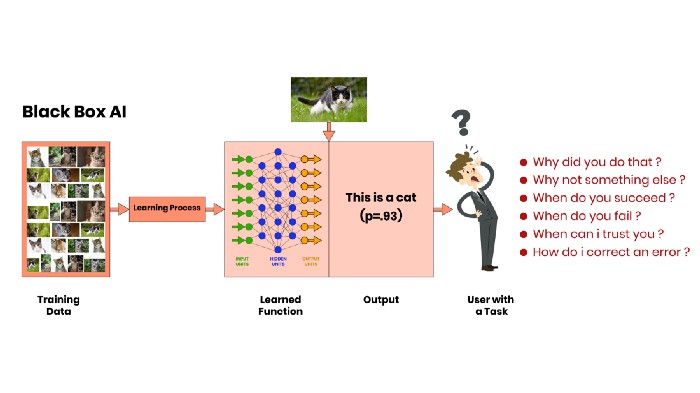

Black box AI is any artificial intelligence system whose operations are not visible to the user. This opacity creates confusion and doubt. How can we trust that the predictions are correct? How do we avoid obvious mistakes? How can we understand and predict the system’s behavior? A system with the ability to explain itself is essential for people to understand, trust, and effectively manage the emerging generation of AI applications.

So, What Is Explainable AI?

Let’s see it through an example!

We use some data to train a model using a specific learning process. This results in a learned function, which can then be fed inputs. It outputs a prediction through a prediction interface, which is what the end user sees and interacts with. But what if the end user is not certain about the output? Then the user might need to know why the model came to that decision, in order to prevent further mistakes.

For instance, let us input an image of a cat into our model, and suppose our learned function predicts ‘Cat’. But what if we ask the model why it is a cat and not something else? Despite the simplicity of the question, the model is not able to answer it, because its predictions are made without any justification. Even when the prediction is correct, a bare answer is sometimes not enough for us.
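The situation can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two numeric features, the labels, and the nearest-centroid learning process are hypothetical stand-ins chosen to keep the example small, not a description of any particular production model.

```python
def train(examples):
    """Learn one centroid per label and return the learned function."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    centroids = {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}

    def learned_function(features):
        # Return the label of the closest centroid. Only the label comes
        # back: no reason, no evidence, no confidence.
        def squared_distance(centroid):
            return sum((a - b) ** 2 for a, b in zip(features, centroid))
        return min(centroids, key=lambda lab: squared_distance(centroids[lab]))

    return learned_function


# Hypothetical training data: (feature vector, label) pairs.
training_data = [
    ((0.9, 0.8), "Cat"),
    ((0.8, 0.9), "Cat"),
    ((0.1, 0.2), "Dog"),
    ((0.2, 0.1), "Dog"),
]

predict = train(training_data)
print(predict((0.85, 0.7)))  # the user sees a label and nothing else
```

The caller of `predict` gets an answer but has no way to ask why, which is exactly the gap described above.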

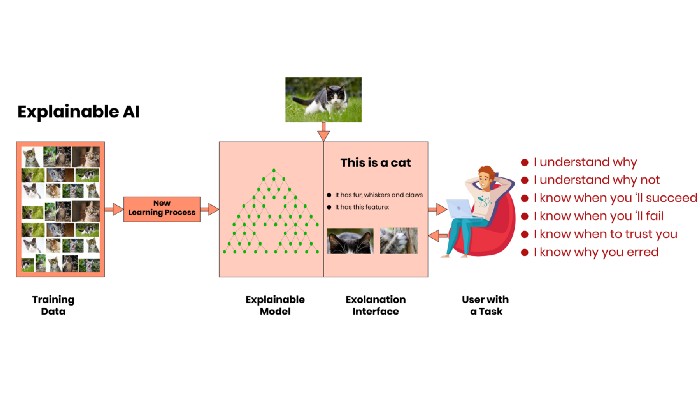

Explainable AI or Interpretable AI is the answer to our concern.

XAI aims to address how the black box decisions of AI systems are made. In simple terms, the AI is transparent in its operations so that human users are able to understand and trust its decisions. The need to trust AI-based systems with all manner of decision making and predictions is paramount. One common approach in XAI is to use a different learning process, for example an inherently interpretable model such as a decision tree, to produce the output. Such a system not only gives us an AI that can predict, but one that can also explain why a prediction was made.
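As a minimal sketch of that idea, the toy decision tree below returns its prediction together with the rules it followed to reach it. The tree is written by hand rather than learned, and the feature names (`whisker_length_cm`, `ear_pointiness`) and thresholds are made up for illustration only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    feature: Optional[str] = None    # feature tested at this node
    threshold: float = 0.0
    left: Optional["Node"] = None    # branch taken when value <= threshold
    right: Optional["Node"] = None   # branch taken when value > threshold
    label: Optional[str] = None      # set only on leaf nodes


def predict_with_explanation(node, sample):
    """Walk the tree and record every rule applied along the way."""
    reasons = []
    while node.label is None:
        value = sample[node.feature]
        if value <= node.threshold:
            reasons.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            reasons.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.label, reasons


# Hypothetical hand-built tree; a real one would be learned from data.
TREE = Node(
    feature="whisker_length_cm",
    threshold=3.0,
    left=Node(label="Not a cat"),
    right=Node(
        feature="ear_pointiness",
        threshold=0.5,
        left=Node(label="Not a cat"),
        right=Node(label="Cat"),
    ),
)

label, reasons = predict_with_explanation(
    TREE, {"whisker_length_cm": 6.0, "ear_pointiness": 0.9}
)
print(label)
for reason in reasons:
    print("  because", reason)
```

Here the answer to "why is it a cat?" is simply the path through the tree, which is what makes this family of models attractive when explanations matter.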

Why Is XAI Important?

Explainable AI is responsible AI. The more we involve AI in our daily lives, the more we need to be able to trust the decisions it makes. An AI system’s decisions can sometimes come at the expense of human health and safety. This is why AI systems need to be more transparent about their reasoning. Decisions without explanations are not intelligent!

Some of the reasons why an AI system should be able to justify its decisions are:

- Giving users confidence in the system: Users’ trust and confidence in an AI system is the most cited reason for pursuing XAI.

- Safeguarding against bias: Transparency is necessary to check whether an AI system is using its data in ways that can result in unfair outcomes.

- Meeting regulatory standards or policy requirements: Transparency or explainability can be important when implementing legal rights around an AI system. It can help demonstrate that a product or service meets regulatory standards.

- Improving system design: The ability of the AI system to explain itself can help developers understand why it behaved in a certain way and formulate the necessary improvements.

- Estimating risk, robustness, and vulnerability: Understanding how a system works can also help in estimating risk factors. This can be a necessity when a system is deployed in a new environment and the user is not sure of its effectiveness. It is a valuable capability in safety-critical settings such as airports, ATMs, and aircraft operations.

- Understanding and verifying the outputs from a system: Interpretability can be helpful when verifying the outputs of an AI system. It can clarify how both the modeling choices and the data used affect the outcomes of the system.

What Are The Challenges?

Like a coin, XAI has two sides. So far we have discussed its pros. Now let us look at some of its cons:

- Confidentiality: Sometimes the algorithms, the input data, or the decision-making process might be a trade secret or otherwise confidential. Making the whole process more transparent can expose that information and increase security risk.

- Injustice: We may understand how an algorithm works, but we still need an explanation of how the system is consistent with legal or ethical codes.

- Unreasonableness: An algorithm may use authorized data and still make decisions that are not reasonable. How can we confirm that a prediction is right if it comes from an AI system? This calls for even greater transparency.

What Are The Possible Workarounds?

- Model-specific method: Using this method, the internal workings of the model are treated as a white box. It means we get explicit knowledge about the internal process. Here local interpretations focus on specific data points, while global interpretations are focused on more general patterns across all data points.

- Model-agnostic method: Model-agnostic methods are considerably more flexible than model-specific methods. They can generally be applied to an entire class of learning techniques. With this approach, the internal workings of the model are treated as an unknown black box.
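To make the model-agnostic idea concrete, here is a small sketch of permutation feature importance, one well-known technique of this kind: it only calls the model and measures how much accuracy drops when one feature's values are rearranged across rows. The `black_box` model and the data are hypothetical, and the column is rearranged with a fixed cyclic shift instead of the usual random shuffle so that the result is reproducible.

```python
def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    return sum(model(row) == y for row, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_features):
    """Accuracy drop per feature when that feature's column is permuted.

    The model is only ever called, never inspected, so this works for
    any predictor. Simplification: a cyclic shift stands in for a
    random shuffle.
    """
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        shifted = column[1:] + column[:1]
        permuted = [row[:j] + (v,) + row[j + 1:] for row, v in zip(rows, shifted)]
        importances.append(baseline - accuracy(model, permuted, labels))
    return importances


def black_box(row):
    # Hypothetical opaque model. The caller does not know that it
    # relies on feature 0 only.
    return 1 if row[0] > 0.5 else 0


rows = [(0.9, 0.1), (0.2, 0.8), (0.7, 0.3), (0.1, 0.6)]
labels = [1, 0, 1, 0]

print(permutation_importance(black_box, rows, labels, n_features=2))
```

A large drop for feature 0 and no drop for feature 1 reveals which input the model depends on, without ever opening the box. In practice the shuffle would be random and repeated several times to average out noise.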

What Do We Do?

Artificial intelligence (AI) makes it possible for machines to learn from experience, adapt to new inputs, and perform tasks. A large amount of data is required to train a system to predict correctly and to enable explainable AI. As a data labeling service provider, we prepare quality training data for your models. If you need such data, feel free to let us know about your data labeling requirements.